libtins

packet crafting and sniffing library

Benchmarks

This section contains benchmarks that compare the performance of libtins with that of other libraries serving the same purpose.

Testing environment

Every benchmark was executed on a computer with the following specs:

- Intel Core i7-2670QM (2.20 GHz)

- 8GB DDR3 RAM (1333 MHz)

- Linux Mint 17 (64-bit), kernel 3.13.0

- Compiler - gcc 4.8.4

Tested libraries

Benchmarks were executed using the following libraries:

- libtins v4.0 (compiled using the LIBTINS_ENABLE_CXX11 CMake switch)

- libcrafter v0.3

- Pcap++ v17.11

- scapy v2.2

- impacket v0.9.11

- dpkt v1.8

- libpcap v1.5.3

If you want to reproduce the benchmarks, you can download the benchmark project on GitHub.

Note that the libtins, libcrafter and libpcap test cases were compiled as C++11 (using the -std=c++11 gcc switch).

Note: in the charts, the scapy bars look almost as tall as the impacket ones, but scapy actually takes far longer. Its times are so large that the maximum Y-axis value had to be capped; otherwise the remaining bars would be barely visible. Hovering over a bar shows the actual time in seconds.

Benchmark #1 - Interpreting TCP

In this first benchmark, I generated a pcap file containing 500000 packets, all built from the following template:

EthernetII packet = EthernetII() / IP() / TCP() / RawPDU(std::string(742, 'A'));

That means the pcap file contains 500000 packets, each of which has an Ethernet II frame, an IP datagram, a TCP segment, and a payload of 742 bytes at the end of the PDU chain.

The test case developed for every library basically opens the generated pcap file and interprets each of the packets. Here "interprets" means creating the corresponding Ethernet object for each library, along with all of the PDUs that follow it.

The libpcap test is used as a base case; it simply reads the pcap file and counts the number of packets in it. No library that interprets packets should be faster than libpcap, since all of them use it implicitly to read the pcap file.
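To make the base case concrete, here is a minimal sketch of what "reading a pcap file and counting its packets" involves. It is not libpcap's code and not the benchmark's test case: it assumes the classic pcap file format (a 24-byte global header followed by records with 16-byte headers) already loaded into memory, and the names `read_u32` and `count_pcap_records` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Reads a 32-bit unsigned value at `offset`, honouring the file's byte order.
std::uint32_t read_u32(const std::vector<std::uint8_t>& buf, std::size_t offset,
                       bool little_endian) {
    assert(offset + 4 <= buf.size());
    const std::uint32_t b0 = buf[offset];
    const std::uint32_t b1 = buf[offset + 1];
    const std::uint32_t b2 = buf[offset + 2];
    const std::uint32_t b3 = buf[offset + 3];
    return little_endian ? (b0 | (b1 << 8) | (b2 << 16) | (b3 << 24))
                         : ((b0 << 24) | (b1 << 16) | (b2 << 8) | b3);
}

// Counts the records of a classic pcap file without looking at any payload.
std::size_t count_pcap_records(const std::vector<std::uint8_t>& file) {
    const std::size_t global_header_size = 24;
    const std::size_t record_header_size = 16;
    if (file.size() < global_header_size) {
        throw std::runtime_error("truncated pcap global header");
    }

    // The magic number reveals the byte order the file was written in
    // (microsecond and nanosecond timestamp variants are both accepted).
    const std::uint32_t magic = read_u32(file, 0, true);
    bool little_endian = true;
    if (magic == 0xa1b2c3d4u || magic == 0xa1b23c4du) {
        little_endian = true;
    } else if (magic == 0xd4c3b2a1u || magic == 0x4d3cb2a1u) {
        little_endian = false;
    } else {
        throw std::runtime_error("not a classic pcap file");
    }

    std::size_t count = 0;
    std::size_t offset = global_header_size;
    while (offset < file.size()) {
        if (file.size() - offset < record_header_size) {
            throw std::runtime_error("truncated record header");
        }
        // The captured length sits 8 bytes into the record header,
        // right after the two timestamp fields.
        const std::uint32_t captured_len = read_u32(file, offset + 8, little_endian);
        offset += record_header_size;
        if (file.size() - offset < captured_len) {
            throw std::runtime_error("truncated record payload");
        }
        offset += captured_len;  // skip the packet bytes entirely
        ++count;
    }
    return count;
}
```

Because the loop only hops from one record header to the next, its cost is a lower bound for any library that additionally has to build protocol objects from the skipped bytes.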

The benchmark results were the following:

| Library | Time taken (seconds) | Packets per second |
|---|---|---|
| libpcap | 0.141 | 3546099 |
| libtins | 0.273 | 1831501 |
| pcapplusplus | 0.38 | 1315789 |
| dpkt | 8.132 | 61485 |
| libcrafter | 12.209 | 40953 |
| impacket | 18.5 | 27027 |
| scapy | 187.082 | 2672 |

The first column indicates the library tested. The second column indicates the time that library took to parse the entire file, and the last one shows how many packets per second it interpreted.
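The relationship between the columns can be written down as a small sketch. The function names below are hypothetical helpers, not part of any benchmarked library; the second one expresses a library's time as a multiple of the libpcap base case.

```cpp
#include <cassert>

// Packets interpreted per second for a run over `packets` packets.
double packets_per_second(double packets, double seconds) {
    assert(seconds > 0.0);
    return packets / seconds;
}

// How many times longer a library takes than the baseline run.
double slowdown_vs_baseline(double library_seconds, double baseline_seconds) {
    assert(baseline_seconds > 0.0);
    return library_seconds / baseline_seconds;
}
```

For example, `packets_per_second(500000, 0.273)` truncated to an integer gives the 1831501 listed for libtins, and `slowdown_vs_baseline(0.273, 0.141)` shows libtins taking a little under twice the libpcap time in this benchmark.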

Benchmark #2 - Interpreting TCP + TCP Options

This benchmark is very similar to the one above, but in this one, I added TCP options to the TCP segment. This is the code that generates each packet:

EthernetII packet = EthernetII() / IP() / TCP() / RawPDU(std::string(742, 'A'));

// Search for the TCP PDU

TCP &tcp = packet.rfind_pdu<TCP>();

// Add several options

tcp.mss(1234);

tcp.winscale(123);

tcp.sack_permitted();

tcp.sack({ 1234, 5678, 91011 });

tcp.timestamp(1, 2);

tcp.altchecksum(TCP::CHK_TCP);

The results of this benchmark were the following:

| Library | Time taken (seconds) | Packets per second |
|---|---|---|
| libpcap | 0.145 | 3448275 |
| libtins | 0.364 | 1373626 |
| pcapplusplus | 0.385 | 1298701 |
| dpkt | 8.508 | 58768 |
| libcrafter | 20.199 | 24753 |
| impacket | 44.491 | 11238 |
| scapy | 194.543 | 2570 |
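The extra work in this benchmark comes from walking the options area of each TCP header. The following is a minimal sketch of that walk, assuming the standard kind/length/value layout (kind 0 ends the list, kind 1 is a single padding byte, every other option carries a length byte that counts the kind and length bytes too). It is not libtins' implementation, and `TcpOption` and `parse_tcp_options` are hypothetical names.

```cpp
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// One decoded option: its kind and the raw value bytes (possibly empty).
struct TcpOption {
    std::uint8_t kind;
    std::vector<std::uint8_t> data;
};

// Splits the options area of a TCP header into individual options.
std::vector<TcpOption> parse_tcp_options(const std::vector<std::uint8_t>& bytes) {
    std::vector<TcpOption> options;
    std::size_t i = 0;
    while (i < bytes.size()) {
        const std::uint8_t kind = bytes[i];
        if (kind == 0) {  // End of Option List: stop parsing
            break;
        }
        if (kind == 1) {  // No-Operation: one byte of padding
            ++i;
            continue;
        }
        if (i + 1 >= bytes.size()) {
            throw std::runtime_error("option is missing its length byte");
        }
        const std::size_t length = bytes[i + 1];
        if (length < 2 || length > bytes.size() - i) {
            throw std::runtime_error("invalid option length");
        }
        // The value is whatever follows the kind and length bytes.
        TcpOption option;
        option.kind = kind;
        option.data.assign(bytes.begin() + static_cast<std::ptrdiff_t>(i + 2),
                           bytes.begin() + static_cast<std::ptrdiff_t>(i + length));
        options.push_back(option);
        i += length;
    }
    return options;
}
```

With the options used above, a parser like this would encounter kinds 2 (MSS), 3 (window scale), 4 (SACK permitted), 5 (SACK), 8 (timestamps) and 14 (alternate checksum request), each of which requires its own length check and value decoding.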

Benchmark #3 - Interpreting DNS responses

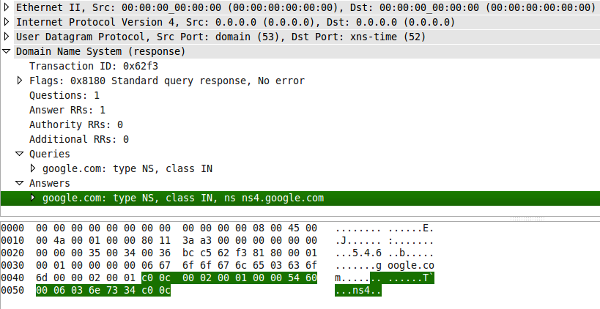

This benchmark measures the speed at which each library can parse DNS packets. The input pcap file consists of 500000 copies of the following packet:

As you can see, there are three domain names present and two of them use compression. For this test case, the code used for each library not only parses the packet and constructs the appropriate DNS object when required, but also iterates over both the question and answer records so as to force the library to parse the domain names included in them.
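Name compression is what makes this step non-trivial: a name may end in a two-byte pointer to an earlier position in the message instead of spelling out its remaining labels. The sketch below decodes such a name, assuming the standard DNS wire format (length-prefixed labels, pointers marked by the two high bits of the length byte). It is an illustration only, not how any of the benchmarked libraries does it, and `decode_dns_name` is a hypothetical name.

```cpp
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <string>
#include <vector>

// Decodes the domain name that starts at `offset` inside a DNS message.
std::string decode_dns_name(const std::vector<std::uint8_t>& msg, std::size_t offset) {
    std::string name;
    std::size_t jumps = 0;
    while (true) {
        if (offset >= msg.size()) {
            throw std::runtime_error("name runs past the end of the message");
        }
        const std::uint8_t len = msg[offset];
        if (len == 0) {  // zero-length root label terminates the name
            break;
        }
        if ((len & 0xC0) == 0xC0) {  // compression pointer
            if (offset + 1 >= msg.size()) {
                throw std::runtime_error("truncated compression pointer");
            }
            // Bound the number of jumps so a pointer loop cannot hang us.
            if (++jumps > msg.size()) {
                throw std::runtime_error("compression pointer loop");
            }
            // The pointer's low 14 bits are an offset from the message start.
            offset = (static_cast<std::size_t>(len & 0x3F) << 8) | msg[offset + 1];
            continue;
        }
        if ((len & 0xC0) != 0) {
            throw std::runtime_error("unsupported label type");
        }
        if (offset + 1 + len > msg.size()) {
            throw std::runtime_error("label runs past the end of the message");
        }
        if (!name.empty()) {
            name += '.';
        }
        for (std::size_t i = 0; i < len; ++i) {
            name += static_cast<char>(msg[offset + 1 + i]);
        }
        offset += 1 + static_cast<std::size_t>(len);
    }
    return name;
}
```

Iterating over the question and answer records forces this kind of decoding for every name in every packet, which is why the test cases do more than just construct the DNS object.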

The results of this benchmark were the following:

| Library | Time taken (seconds) | Packets per second |
|---|---|---|
| libpcap | 0.036 | 13888888 |
| libtins | 0.391 | 1278772 |
| pcapplusplus | 0.724 | 690607 |
| libcrafter | 13.524 | 36971 |
| dpkt | 23.319 | 21441 |
| impacket | 36.487 | 13703 |
| scapy | 374.637 | 1334 |

Conclusions

As you can see, libtins is faster at interpreting packets than the rest of the libraries tested. Moreover, it has very little overhead compared to libpcap, which does not perform any packet interpretation at all.

More benchmarks will be added soon.